HR decisions by AI need data about you. Let’s see where that data could come from. Your face is already in more databases than you would like. Your mobile number is being collected everywhere. Restaurants ask for it routinely (I don’t ever provide it). Security teams at offices and even at apartment complexes ask for it. Several offices jot down the details of your government ID. The moment you use your credit card, the details of your purchase are sold to several buyers who like to build an intimate profile of you. If your photos have been uploaded by your friends on social media, then your efforts at staying off the grid are futile.

When you apply for a job, you submit enormous detail to the potential employer. In many organizations, your social media posts are screened before you enter. Once you join, varying degrees of data gathering are in progress every day.

Marking attendance by biometrics, face recognition and geo-fencing is slowly becoming the norm. The employer in any case has your performance data and records of all your phone calls and emails. Your comments, likes and posts on the intranet, or even the courses that you took or did not complete, reveal much more about you than you imagine.

Facebook Likes can be used to automatically and accurately predict a range of highly sensitive personal attributes including: sexual orientation, ethnicity, religious and political views, personality traits, intelligence, happiness, use of addictive substances, parental separation, age, and gender. The analysis presented is based on a data set of over 58,000 volunteers who provided their Facebook Likes, detailed demographic profiles, and the results of several psychometric tests.

Computer models only needed about 100 likes to outperform an average human judge in their sample.

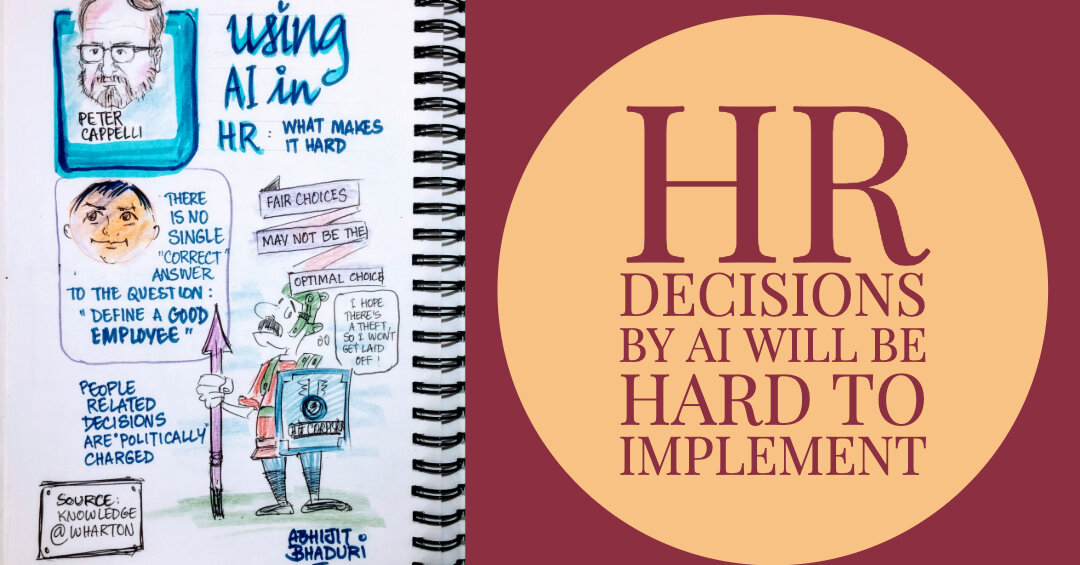

HR decisions by AI

HR teams in organizations are under pressure to start using AI in making decisions. This is a slippery slope. Human beings are biased. Imagine how many of our prejudices get triggered off simply by looking at information on the resume. The name tells us the gender of the person and in many cases the place of origin and much more. Knowing the year of graduation gives us an approximation of the age. The name of the college and the list of employers all trigger our prejudice.

Eliminating prejudice

In my book Don’t Hire the Best, I have shown that most organizations have never trained their interviewers. Nor do they ever test how accurately the interviewer has predicted the performance of the candidates hired. Confidence and language proficiency are often seen as indications of competence. They are not. Yet, interviews remain the basis of most hiring decisions. When “blind” auditions have been used, more female musicians have been hired.

Being objective is not always right

Once we define an objective criterion, e.g. height or weight, the algorithm will clinically eliminate anyone who does not meet the standard. Imagine eliminating someone who misses the height requirement by a millimeter but more than makes up for it by sheer grit and resilience. AI does not care to go beyond the objective, universally acceptable standard that it has been told to look for.
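To make the point concrete, here is a minimal sketch of such a rule. The cutoff of 1700 mm, the `grit` score and the candidate records are all made up for illustration; no real screening system is being described.

```python
from dataclasses import dataclass

# Hypothetical cutoff, chosen only for illustration.
MIN_HEIGHT_MM = 1700


@dataclass
class Candidate:
    name: str
    height_mm: int
    grit: int  # hypothetical 0-10 score that the rule never looks at


def passes_height_rule(candidate: Candidate, min_height_mm: int = MIN_HEIGHT_MM) -> bool:
    """Return True only if the candidate meets the height cutoff."""
    return candidate.height_mm >= min_height_mm


candidates = [
    Candidate("A", height_mm=1699, grit=10),  # misses the cutoff by one millimeter
    Candidate("B", height_mm=1700, grit=3),
]

# The shortlist depends on height alone; grit plays no part in the decision.
shortlist = [c.name for c in candidates if passes_height_rule(c)]
print(shortlist)
```

Candidate A is dropped for a single millimeter, and nothing in the rule gives the high grit score a chance to matter.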

What is optimal is not always fair

There is the old joke about an algorithm analyzing the utilization of musicians in a symphony. Given that the trumpet and saxophone were played for less than 30 seconds in the entire composition, the algorithm concludes that those players should be laid off. The algorithm may be right about utilization, but its answer may not serve a music director’s vision. Imagine using the same algorithm to eliminate some divisions of our Army because of their low utilization. What is optimal is not always right.

In hiring someone, the algorithm needs a human to define the “correct answer” to look for. What should the ideal candidate be? What criteria should the machine look for while comparing two candidates? While the machine may recommend a candidate who has a higher degree (e.g. a candidate with a Master’s degree is preferred to a candidate with a Bachelor’s), it may eliminate a candidate who is a better team player because that is hard to define.
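A rough sketch of that comparison, assuming a made-up ranking of degrees and invented candidate records, shows how whatever is hard to define simply drops out of the decision:

```python
# Hypothetical ranking of degrees; a human has to choose these numbers.
DEGREE_RANK = {"Bachelors": 1, "Masters": 2}


def prefer(a: dict, b: dict) -> dict:
    """Return the candidate with the higher-ranked degree (ties go to the first)."""
    if DEGREE_RANK[a["degree"]] >= DEGREE_RANK[b["degree"]]:
        return a
    return b


# Invented records: "team_player" is recorded but never used by the rule,
# because nobody has told the machine how to measure it.
asha = {"name": "Asha", "degree": "Bachelors", "team_player": True}
ravi = {"name": "Ravi", "degree": "Masters", "team_player": False}

recommended = prefer(asha, ravi)
print(recommended["name"])
```

The rule is easy to defend, yet it only ever sees the criterion someone managed to write down.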

As HR is under pressure to eliminate human bias by using algorithms, the decision suggested by the algorithm may be objective and easy to defend, but may be hard to implement if it is seen to be “unfair”. What is fair to me may be unfair to you. Yet handing decisions over to algorithms seems to be the path HR is being goaded to adopt.

Be careful what you wish for; it may come true.
